The data analytics market has evolved rapidly, with a wider group of people and practitioners applying algorithms to larger datasets more frequently. Many of the sophisticated algorithms in use are more than 50 years old, but the recent ubiquity of almost limitless data storage and compute power, together with improved development tools, has enabled analytics at far greater scale, both today and in the future.

Data analytics systems have advanced to apply more complex analysis far more responsively. Analytics is still about creating insight and answering important questions, which businesses can exploit to achieve better outcomes. Contemporary systems apply machine-learning to more diverse datasets, with the computer, rather than the operator, driving more of the analysis. Because new data arrives in real time, insights can be discovered as soon as the events behind them occur.

Companies looking to make better use of data

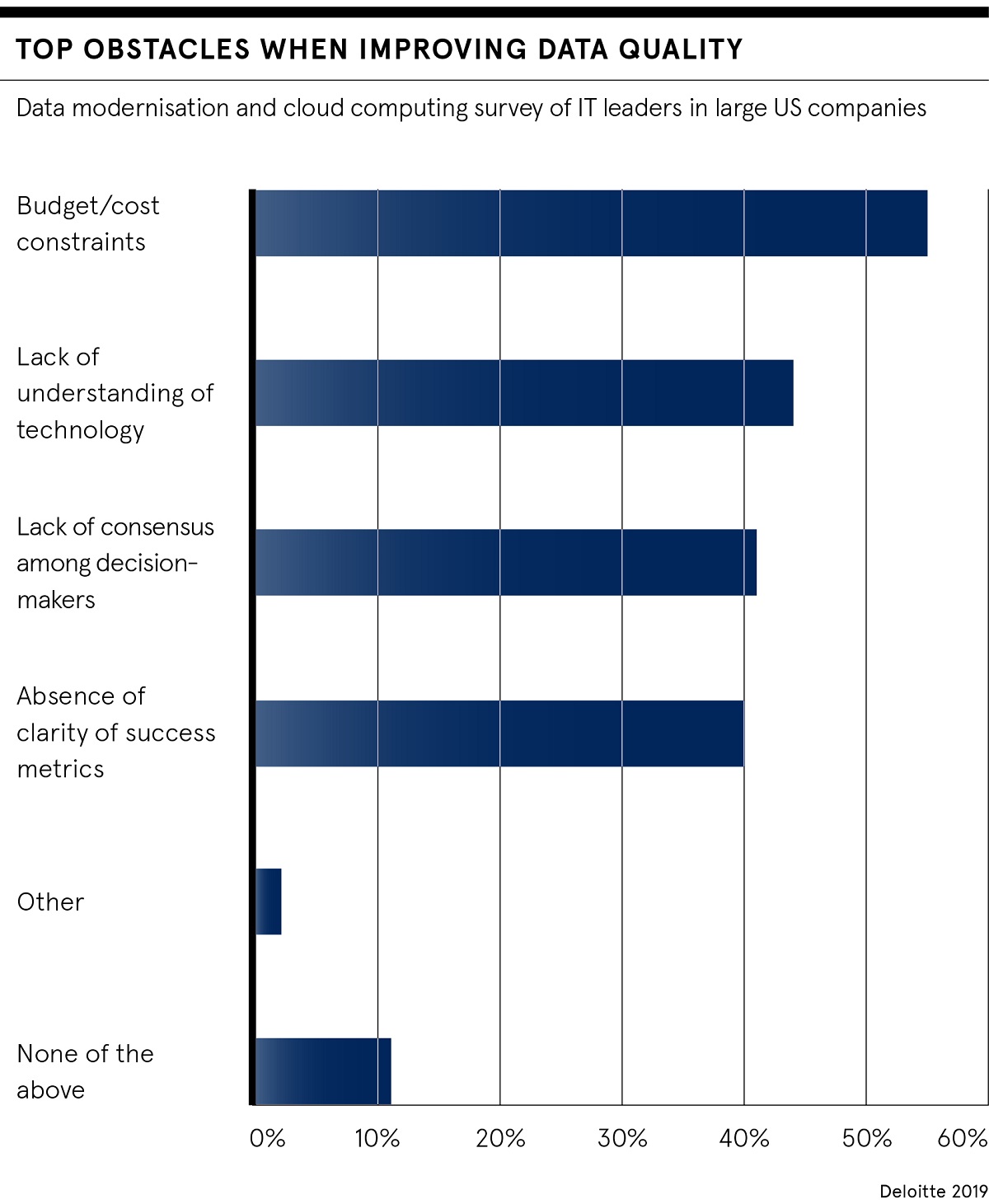

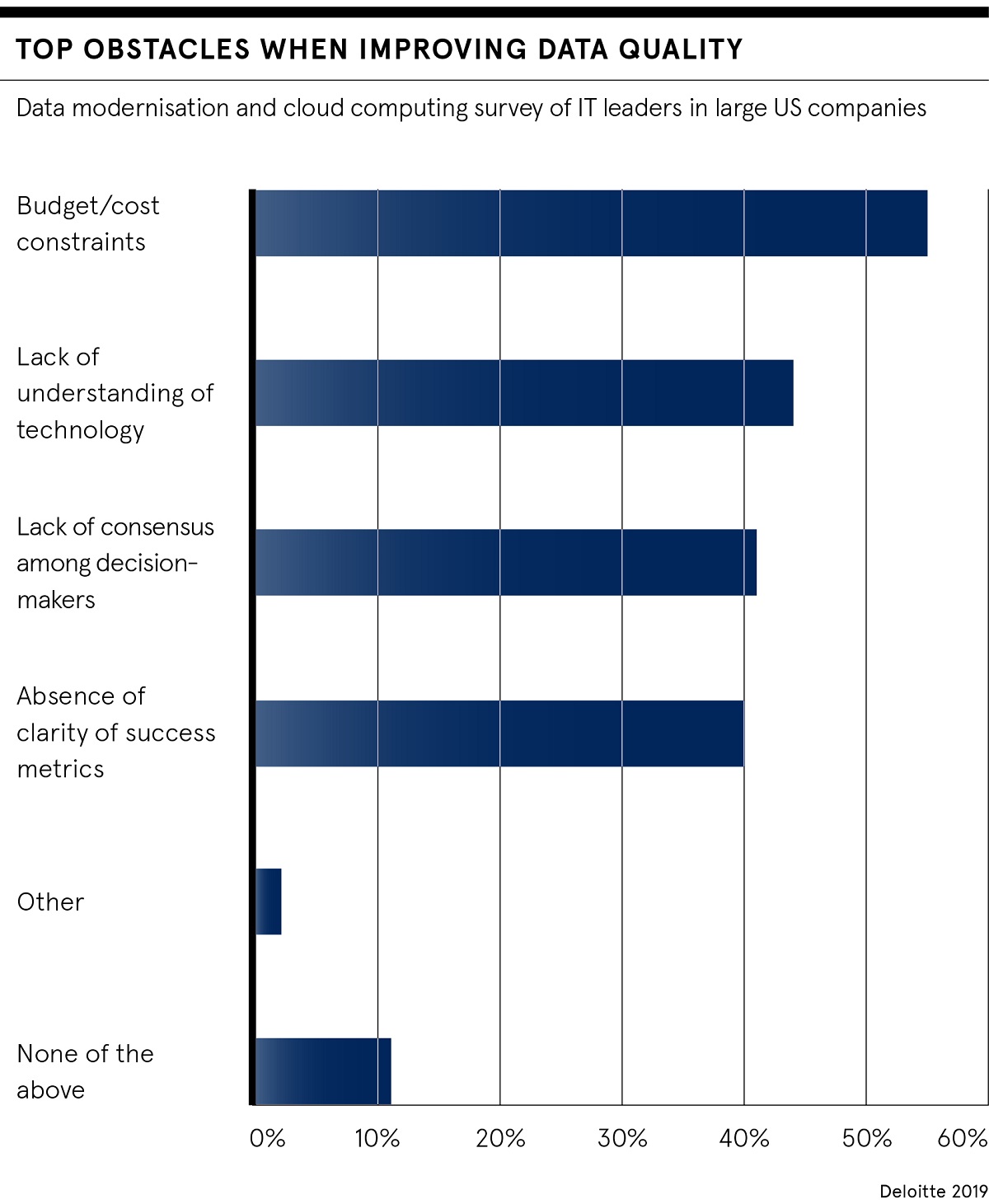

Historically, the demands placed on corporate data have been less onerous. But as companies now look to leverage their data, rather than just record it, a major and rapidly growing issue is the veracity of that data. Any analysis is only ever as good as the data fed into the system. Improving data quality and data management has not historically been a requirement, and as a result much of the data created and used isn’t up to scratch for the type of analytics companies now need to develop.

Analytics tools and technologies are to a large extent commoditised; the single biggest obstacle to organisations exploiting them is the creation and availability of adequate datasets. Establishing these foundations is a particular problem in organisations that have not historically fostered a data-centric culture.

“Sometimes relevant data doesn’t exist and when it does its location is often poorly understood, riddled with quality issues and spread across multiple systems of record,” says Paul Fermor, UK solutions director at Software AG. “The majority of organisations that have realised the potential value of their data are engaged in substantive projects to improve its quality, real-time availability and integration across systems.”

Is cloud the future of data analytics?

Senior leaders want data analytics capabilities for their organisation, but often become frustrated by slow progress caused by the limitations of their existing core data infrastructure. This is particularly common in companies that have grown through acquisition, leaving them with fragmented technology, teams and cultures.

The rise of cloud has helped resolve concerns about data infrastructure scalability by providing data analytics software as a service. Cloud makes the latest tooling readily available without the need to maintain and patch it in-house, while traditional database administrators can now build machine-learning models without the specialist knowledge that was required just a few years ago.

“Cottage industries and data fiefdoms will gradually disintegrate; the future of data analytics is in the cloud,” says James Tromans, technical director at Google Cloud. “Those with the correct clearance can quickly start applying advanced data analytics to a valuable business problem in a way that simply wasn’t possible previously.”


As new technical capability is injected into organisations, transforming with cloud infrastructure is becoming much easier. Now that the infrastructure in which algorithms reside is widely available, the major cloud providers are racing to add related technology, such as live streaming and security. As well as the analytics itself, they want to cover the control and regulatory compliance of the data.

Smart data analysis key to beating competition

One company already combining machine-learning and data analytics in the cloud is Ocado Technology. Using TensorFlow together with BigQuery, Ocado developed a system that predicts and recognises incidents of fraud among millions of normal events, using data collected from past orders as well as known cases of fraud. The resulting model improved Ocado’s precision in detecting fraud by a factor of 15.
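Precision here means the share of orders flagged as fraudulent that genuinely are fraud: true positives divided by all flagged cases, TP / (TP + FP). Because fraud is rare among millions of normal orders, even a small rate of false alarms can swamp the genuine cases. The minimal Python sketch below shows how the metric is calculated; the counts are invented purely for illustration and are not Ocado’s figures, and the `precision` helper is hypothetical rather than part of TensorFlow or BigQuery.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged events that really were fraud: TP / (TP + FP)."""
    flagged = true_positives + false_positives
    if flagged == 0:
        # Nothing was flagged, so the ratio has no meaning.
        raise ValueError("no events were flagged, so precision is undefined")
    return true_positives / flagged


# Hypothetical counts for illustration only; these are NOT Ocado's figures.
# A simple rule flags 1,000 orders, of which 20 turn out to be real fraud.
baseline = precision(true_positives=20, false_positives=980)
# A trained model flags 200 orders, of which 60 turn out to be real fraud.
model = precision(true_positives=60, false_positives=140)

print(f"baseline={baseline:.2f} model={model:.2f} improvement={model / baseline:.0f}x")
```

In this made-up example the model flags far fewer orders yet catches more real fraud, so a much larger share of its alerts are worth investigating.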

“Business and industries are being disrupted by cheaper, more agile competition. How they respond is based on how they are able to manage and exploit their data,” says Nick Whitfeld, head of data and analytics at KPMG UK. “The culture around data needs to change. Organisations need to fully understand that if the quality of data at the point of creation is poor, this will undermine investment or focus in the future of data analytics.”

Mr Fermor at Software AG believes the future of data analytics will address harder problems, such as creating more human-like machines. “This might manifest itself in more convincing chatbots and artificial intelligence assistants, or improved medical diagnosis tools. There are also efforts to automate the machine-learning process, which is still driven by humans, and create a less technical, self-service approach to creating and deploying sophisticated models,” he says.

Organisations that want to become data-enabled must evaluate their skills and operating models for the future of data analytics. Being able to process and exploit data quickly will require an ecosystem of talented people equipped to work at an unprecedented level of accuracy.
